Garment Animation NeRF with Color Editing

- PCA Lab

We introduce a novel Garment Animation NeRF that generates character animations directly from body motion sequences, eliminating the need for an explicit garment proxy. Once trained, our network produces garment animations with intricate wrinkle details, plausible body-and-garment occlusions, and structural consistency across views and frames. We demonstrate that the network generalizes to unseen body motions and camera views, and that it supports color editing of the garment appearance. Notably, our method is applicable to both synthetic and real-captured garment data.

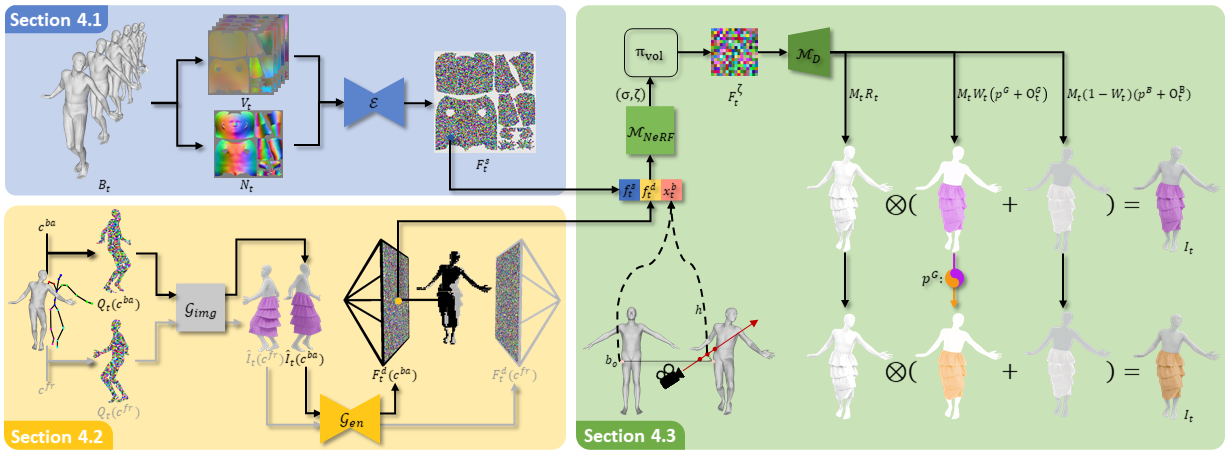

Method Overview

Given a character's body motion sequence, we construct a neural radiance field that renders an animation of the character dressed in the target garment. We first employ a dynamic feature encoder $\mathcal{E}$ to infer the garment dynamic feature map $F_t^s$ from the information textures $V_t$ and $N_t$ of the body motion $B_t$. Simultaneously, taking the body neural texture images $Q_t$ at the front view $c_{fr}$ and the back view $c_{ba}$, we use a pre-trained image generator $\mathcal{G}_{img}$ to predict the reference images $\hat{I}_t(c_{fr})$ and $\hat{I}_t(c_{ba})$. Subsequently, we use the detail feature encoder $\mathcal{G}_{en}$ to generate the detail feature maps $F_t^d(c_{fr})$ and $F_t^d(c_{ba})$. Then, we obtain the body-aware geometric information $x_t^b$ by calculating the distance $h$ between the sampling points and the body surface, and finding $b_o$ on the canonical body shape. Finally, we utilize a NeRF network $\mathcal{M}_{NeRF}$ to render the garment appearance feature image $F_t^{\zeta}$. To enable color editing, we introduce a network $\mathcal{M}_D$ that decomposes the garment appearance into a front mask $M_t$, a color offset map $O_t$, a radiance map $R_t$, and a blending weight map $W_t$. By linearly recombining these visual elements, we synthesize the final frame image $I_t$. Except for the generator $\mathcal{G}_{img}$, we jointly train the networks $\mathcal{E}$, $\mathcal{G}_{en}$, $\mathcal{M}_{NeRF}$, and $\mathcal{M}_D$ in an end-to-end manner.
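The body-aware geometric step above can be illustrated with a minimal NumPy sketch. It is not the authors' implementation and rests on several assumptions: the body is given as vertex arrays, the posed and canonical bodies share vertex ordering, the body surface is approximated by its nearest vertex, and the distance is unsigned. The function name `body_aware_features` and its parameters are hypothetical.

```python
import numpy as np

def body_aware_features(points, posed_verts, canonical_verts):
    """Return (h, b_o) for each sample point (nearest-vertex approximation).

    points:          (P, 3) sample points, e.g. along camera rays
    posed_verts:     (V, 3) body vertices for the current frame
    canonical_verts: (V, 3) the same vertices on the canonical body
                     (shared vertex ordering is an assumption)
    """
    # Offsets between every sample point and every posed vertex: (P, V, 3)
    diff = points[:, None, :] - posed_verts[None, :, :]
    dist = np.linalg.norm(diff, axis=-1)            # (P, V) Euclidean distances
    nearest = np.argmin(dist, axis=1)               # (P,) index of closest vertex
    h = dist[np.arange(len(points)), nearest]       # (P,) unsigned distance to body
    b_o = canonical_verts[nearest]                  # (P, 3) matching canonical position
    return h, b_o

# Toy example: a two-vertex "body" and one sample point.
posed = np.array([[0.0, 0.0, 0.0], [1.0, 0.0, 0.0]])
canonical = np.array([[0.0, 0.0, 0.0], [0.0, 1.0, 0.0]])
pts = np.array([[0.9, 0.0, 0.2]])

h, b_o = body_aware_features(pts, posed, canonical)
# Concatenating b_o and h into one vector is an illustrative choice,
# not the documented layout of the body-aware input.
x_b = np.concatenate([b_o, h[:, None]], axis=1)     # (P, 4)
```

Because the lookup is expressed on the canonical body, a sample point near the same body location yields the same $b_o$ regardless of the pose. This is one plausible reading of why such a feature would help consistency across frames. A nearest-surface-point query on the mesh would be more accurate than the nearest-vertex shortcut used here.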