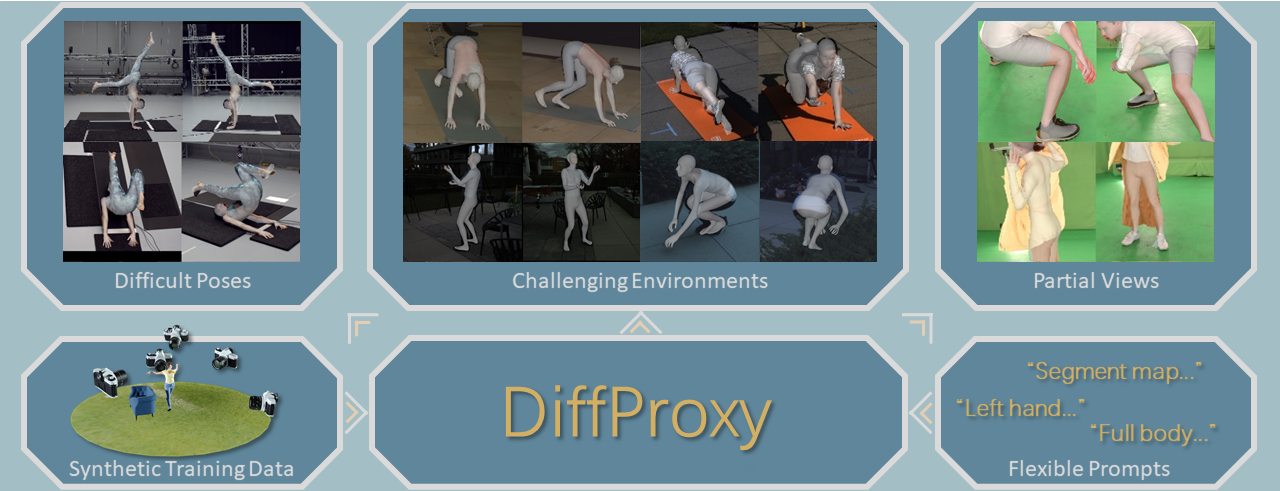

DiffProxy is trained exclusively on synthetic data yet generalizes robustly to real-world scenarios. Our framework accepts diverse prompts (visual and textual), handles difficult poses, generalizes to challenging environments, and supports partial views with flexible view counts. It offers three key advantages: (i) Annotation bias-free: training on synthetic data avoids fitting the annotation biases of real datasets; (ii) Flexible: it adapts to varying view counts, handles partial observations, and works across diverse capture conditions; (iii) Cross-data generalization: it achieves strong performance on unseen real-world datasets without requiring real training pairs.